The next generation of AI is all about neuromorphic computing and simulating the neural networks within the human brain to achieve artificial human cognition. We cover all the basics this week from how biological neural networks communicate to how silicon brains are challenging the traditional weaknesses of AI so that you can Live Easy, Innovatively.

The nervous system, neurons, and neural networks

The nervous system is the communication foundation between the brain and the rest of the body, transmitting signals from the brain to muscles and vital organs, for example; it defines everything from how we move and breathe to how we see and speak.

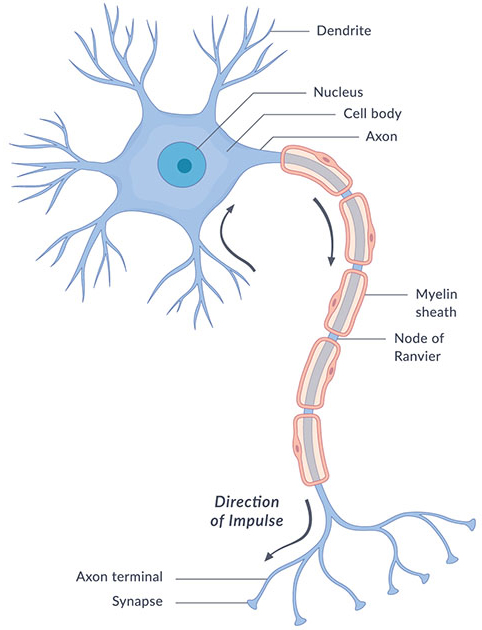

The brain and the spinal cord make up the central nervous system, while the nerves that branch off the spinal cord to the rest of the body make up the peripheral nervous system. At the base of it all is the nerve cell, or neuron, labeled below, of which the human brain contains roughly 86 billion.

Neurons across the body, in bundles called nerves, receive “messages” from other neurons as chemical signals at the dendrites. They then transmit the messages as electrical signals down the axon to the axon terminals, where they leave the neuron as chemical signals again, with messengers – neurotransmitters – that transmit the signals to neighboring neuron dendrites via the synapse. These widespread communication lines between neurons and nerves form the body’s neural networks.

By simulating how neurons transmit signals, artificial neural networks have taken their place in the tech world, combining concepts from artificial intelligence (AI) and human cognition to model the processes of the human brain.

Artificial neural networks for human-computer interaction

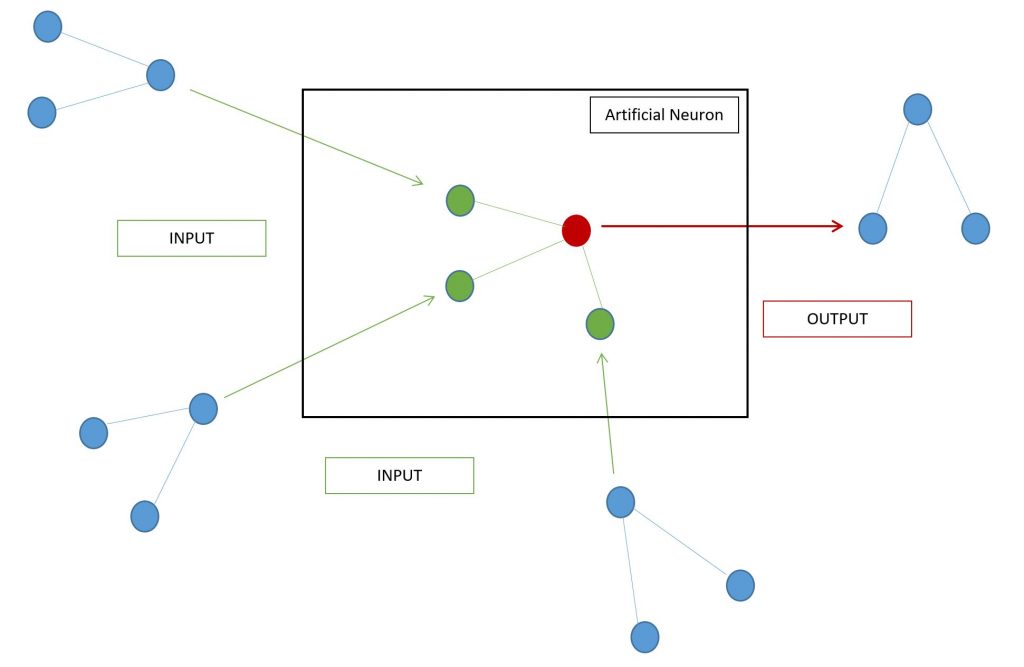

Studying the general properties of neurons to observe their behavior within neural networks has led to attempts to mimic the brain’s learning processes by creating networks of artificial neurons. Each model neuron converts the pattern of incoming activities that it receives into one outgoing activity, which it sends to other model neurons in the network.

To make this communication possible, each synapse is modeled by an adjustable weight that is specific to the connection between the two neurons involved. The total input a neuron receives is then determined by multiplying each incoming activity by the weight on its connection and adding these values together into a single sum.
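The weighted sum described above can be sketched in a few lines of Python. This is a minimal illustration only; the function name `total_input` and the sample values are assumptions chosen for the example, not part of any particular library.

```python
def total_input(activities, weights):
    """Return the weighted sum of incoming activities.

    Each incoming activity is multiplied by the weight on its
    connection, and the products are added together.
    """
    if len(activities) != len(weights):
        raise ValueError("each incoming activity needs exactly one weight")
    return sum(a * w for a, w in zip(activities, weights))


# Hypothetical example: three incoming connections.
incoming = [1.0, 0.5, 0.0]
weights = [0.5, 1.0, 2.0]
result = total_input(incoming, weights)  # 1.0*0.5 + 0.5*1.0 + 0.0*2.0 = 1.0
```

Note that the third connection contributes nothing here: a large weight has no effect when the incoming activity on that connection is zero.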

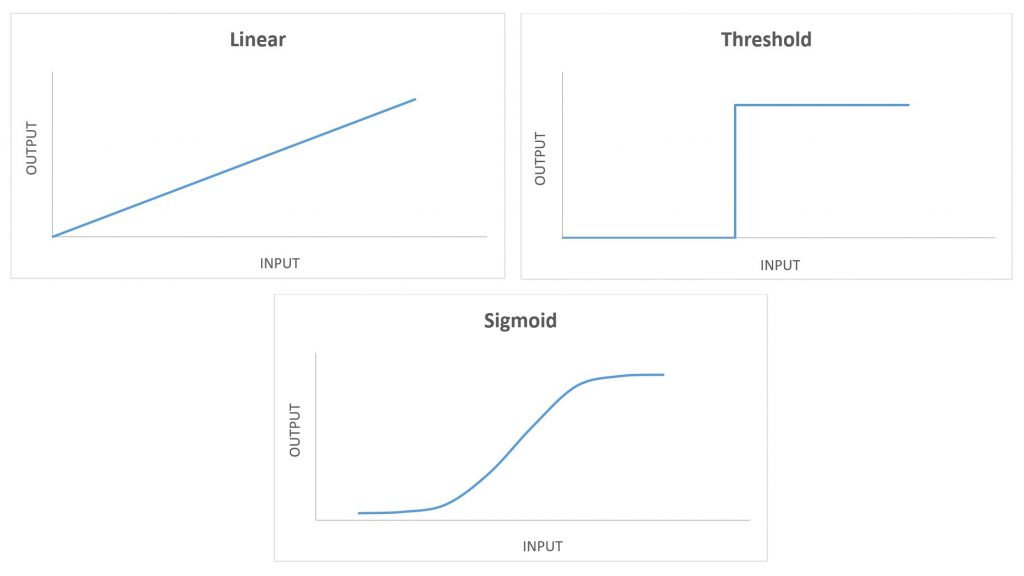

The neuron then applies an input-output function to transform that total input into an outgoing activity. The type of function applied – linear, threshold, or sigmoidal – determines just how input activity and weight on the connection affect the resulting output.
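To make the three function types concrete, here is a rough sketch of each in Python. The parameter names `slope` and `theta`, and their default values, are assumptions for illustration; real models pick these to suit the task.

```python
import math


def linear(x, slope=1.0):
    """Linear unit: output is proportional to the total input."""
    return slope * x


def threshold(x, theta=0.0):
    """Threshold unit: output is 1 once the input reaches theta, else 0."""
    return 1.0 if x >= theta else 0.0


def sigmoid(x):
    """Sigmoidal unit: output varies smoothly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))
```

A threshold unit makes an all-or-nothing decision, while a sigmoidal unit responds gradually, so small changes in the weighted input produce small changes in the output.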

One common artificial neural network architecture, for example, uses three layers of neurons between input and output: input neurons, hidden neurons, and output neurons. Input neurons receive the incoming external information and transmit it to the hidden neurons, which then send it to the output neurons to be communicated to external units again.

At each layer, the artificial neurons can be trained to interpret various input activities uniquely, with a given response, so that achieving a desired output pattern is just a matter of adjusting the weights (“synapses”) on the connections between those neurons.
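The three-layer flow and the effect of adjusting a weight can be sketched as follows. This is a simplified illustration under stated assumptions: every neuron uses a sigmoidal function, the weights are made-up values, and the single weight change is done by hand rather than by a training algorithm.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weight_rows):
    """Compute one layer; each row holds one neuron's incoming weights."""
    return [sigmoid(sum(i * w for i, w in zip(inputs, row)))
            for row in weight_rows]


def forward(inputs, hidden_weights, output_weights):
    """Pass activity from input neurons to hidden neurons to output neurons."""
    hidden = layer(inputs, hidden_weights)
    return layer(hidden, output_weights)


# Hypothetical network: 2 input neurons, 2 hidden neurons, 1 output neuron.
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]
output_weights = [[1.0, -1.0]]

before = forward([1.0, 0.0], hidden_weights, output_weights)

# Adjust one "synapse": flip the weight from the second hidden neuron.
output_weights[0][1] = 1.0
after = forward([1.0, 0.0], hidden_weights, output_weights)
```

With the same input, the output activity rises after the weight is changed from inhibitory to excitatory. Training procedures automate this kind of adjustment across all connections.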

Now, growing these intricately layered networks into complex, intelligent units continues to lead the study of human-computer interaction (HCI) toward neuromorphic computing on the journey to simulating human cognition.

Neuromorphic computing

Neuromorphic computing implements artificial neural networks in hardware, such as electronic analog circuits, to mimic the architecture and communication processes of biological nervous systems. It’s also a driving force behind the next generation of AI as it heads toward artificial human cognition.

Current AI solutions face a known weakness: neural network training and inference depend on literal, deterministic views of events by systems without context or common sense. This makes it difficult for them to perform human activities like interpretation and autonomous adaptation. Consequently, as Intel explains, the “key challenges in neuromorphic research are matching a human’s flexibility, and ability to learn from unstructured stimuli with the energy efficiency of the human brain.”

Like the basic units of the artificial neural networks covered earlier, today’s computational units with neuromorphic computing capabilities are analogous to neurons. Spiking neural networks (SNNs), to give a model example, organize these units to simulate biological neural networks. Each unit can transmit pulsed signals to specific units independently of others in the network. These signals then change the electrical states of the targeted units.
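As a rough sketch of the behavior just described, the Python below models two units using a leaky integrate-and-fire rule. That rule is an assumption chosen for illustration, as are the class name `SpikingUnit` and the `threshold`, `leak`, and weight values; it is not how Loihi or any specific chip is implemented.

```python
class SpikingUnit:
    """A hypothetical leaky integrate-and-fire unit."""

    def __init__(self, threshold=1.0, leak=0.9):
        self.threshold = threshold
        self.leak = leak          # fraction of potential kept each step
        self.potential = 0.0      # the unit's electrical state
        self.targets = []         # (unit, weight) pairs this unit signals

    def connect(self, target, weight):
        self.targets.append((target, weight))

    def receive(self, amount):
        """An incoming pulse changes this unit's electrical state."""
        self.potential += amount

    def step(self):
        """Fire if the threshold is reached, otherwise leak. Returns True on a spike."""
        if self.potential >= self.threshold:
            self.potential = 0.0
            for target, weight in self.targets:
                target.receive(weight)
            return True
        self.potential *= self.leak
        return False


a, b = SpikingUnit(), SpikingUnit()
a.connect(b, 0.6)

a_spikes, b_spikes = [], []
for t in range(6):
    a.receive(0.5)            # constant external stimulus to unit a
    if a.step():
        a_spikes.append(t)
    if b.step():
        b_spikes.append(t)

print(a_spikes, b_spikes)     # [2, 5] [5]
```

Unit `b` does nothing until pulses from `a` arrive, and it only fires once enough of them accumulate before leaking away. That event-driven behavior is the property neuromorphic hardware aims to exploit.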

With this network structure, it is possible to simulate learning processes by encoding information in the transmitted signals and actively remapping the connections (or communication) between units in the network in response to various stimuli. In fact, the technology is the foundation of what are being called “silicon brains.”

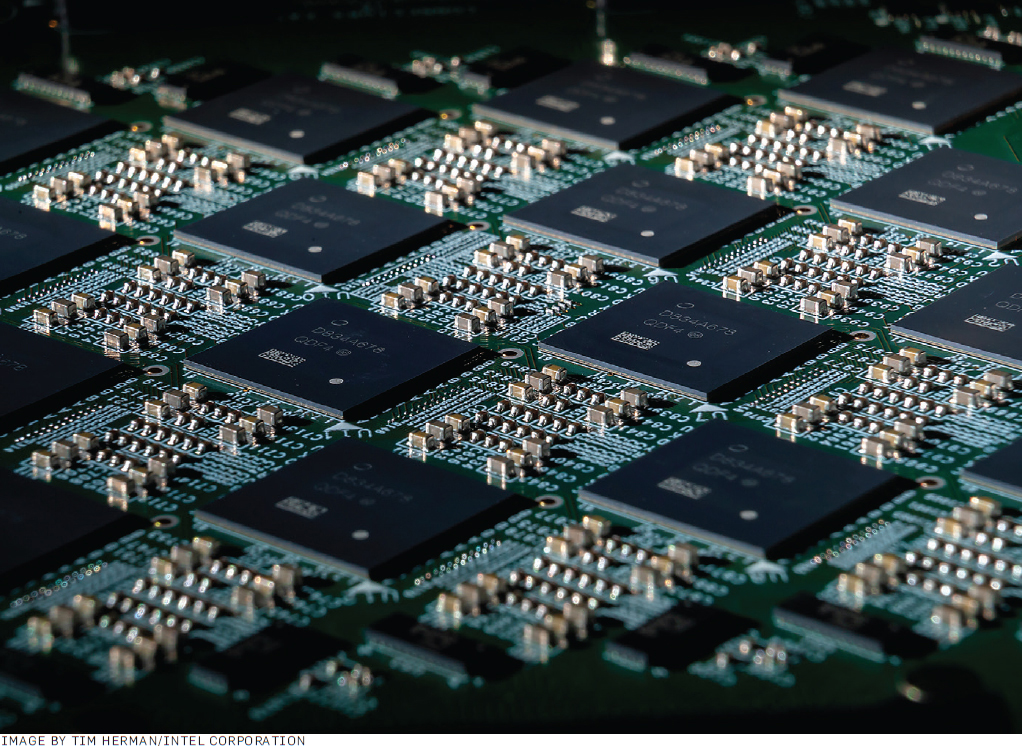

Silicon brains: Neuromorphic chips for artificial human cognition

Back in 2018, research funded by the Defense Advanced Research Projects Agency (DARPA) demonstrated that someone with a brain chip could pilot multiple drones using signals from the brain. Around the same time, Intel also released its “brain on a chip” called Loihi, which now contains 130,000 neurons optimized for SNNs. Since then, Intel has combined 64 of its Loihi neuromorphic chips to create what it calls the “Pohoiki Beach” neuromorphic system, with eight million artificial neurons.

Neuromorphic chips are helping artificial neural networks meet the objective of emulating the impressive energy efficiency of biology. They allow for on-chip, asynchronous processing via event-driven models, leading to significantly reduced energy demands, especially in comparison to the energy consumption observed with most AI and deep learning processes on GPUs. For example, while the human brain has a baseline energy footprint of about 20 watts, a supercomputer can draw up to 200 megawatts.

Beyond energy efficiency, neuromorphic chips allow for faster responses by processing on-board rather than relying on external systems. The asynchronous abilities of artificial neurons within a network allow information to propagate quickly through the network’s multiple layers. Once lower layers provide sufficient activity, spikes can propagate immediately to higher layers, since each artificial neuron can respond independently of others in the system. Comparing this to current deep learning models, Abu Sebastian, Principal Research Staff Member at IBM Zurich, explains that the chips are “very different from conventional deep learning, where all layers have to be fully evaluated before the final output is obtained.”

As next-generation AI homes in on silicon brains, artificial human cognition is rapidly becoming a reality. At LeCiiR, our drive to provide enterprises with quality, innovative technological solutions matches our excitement for the continued development of neuromorphic chips in AI, and we love to talk tech. For questions on our services, neuromorphic technology, or any other topics, don’t hesitate to contact us and leave your comments.
